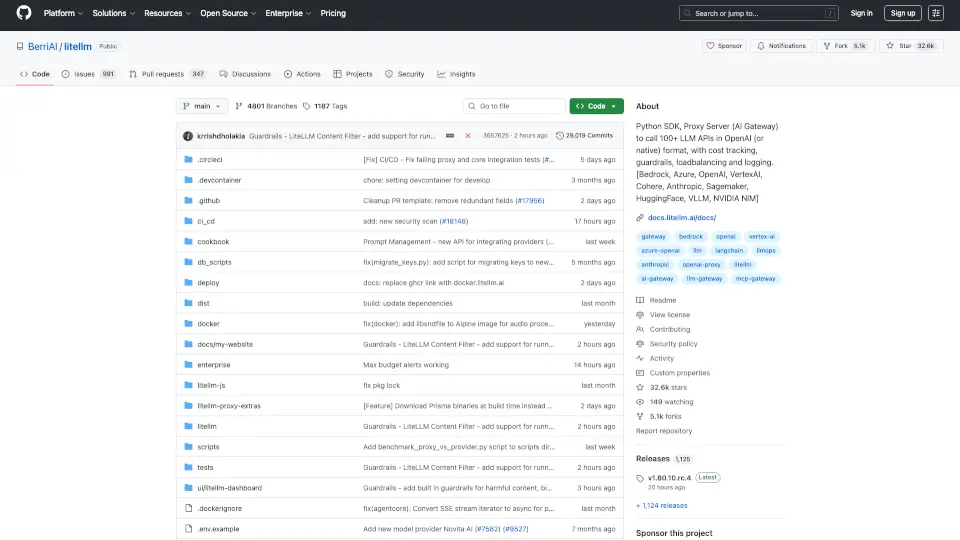

What is BerriAI-litellm?

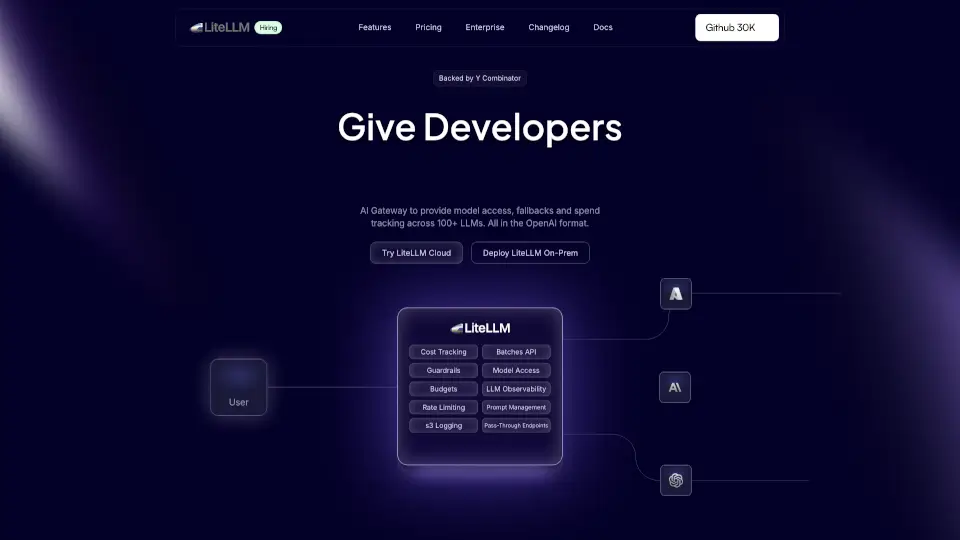

LiteLLM is a Python SDK and proxy server that connects to over 100 LLM APIs, including popular platforms like OpenAI, Azure, and HuggingFace. It exposes them through a single OpenAI-style call format, so developers can integrate language models into their applications without writing provider-specific code.

What are the features of BerriAI-litellm?

- Multi-Provider Support: Connects to various LLM APIs in a unified format.

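- Consistent Output: Returns responses in the same OpenAI-style structure regardless of provider, with the reply text always available at `['choices'][0]['message']['content']`.

- Retry Logic: Automatically retries failed requests and can fall back to a different provider or deployment to improve reliability.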

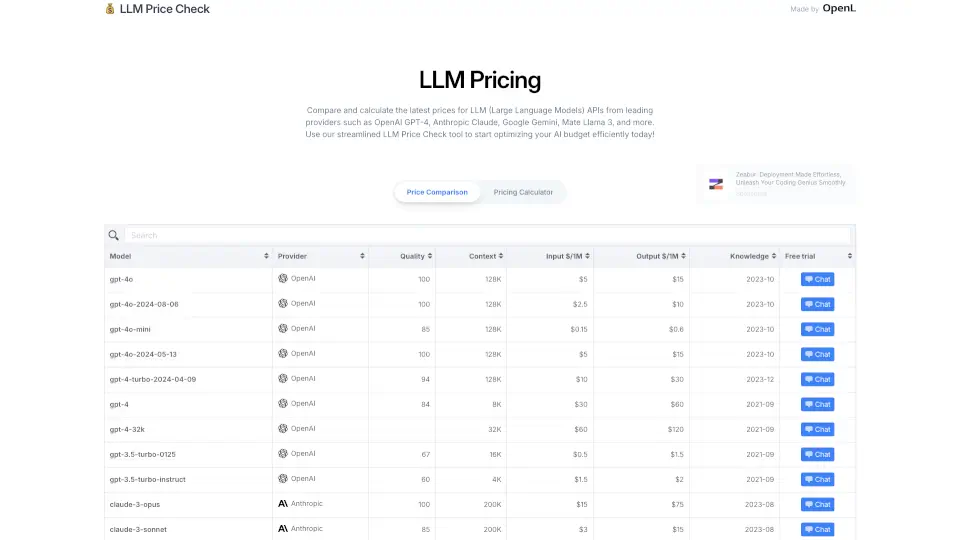

- Cost Tracking: Monitors usage and expenses across multiple projects.

- Rate Limiting: Sets budgets and rate limits on API usage to keep costs under control.
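To make the retry idea from the list above concrete, here is a minimal sketch of retrying a request across several providers. It is not LiteLLM's implementation: `call_with_fallback`, `flaky_send`, and the model names are hypothetical, and the `send` callable stands in for whatever function actually contacts a provider.

```python
from typing import Callable, Optional, Sequence


def call_with_fallback(
    models: Sequence[str],
    send: Callable[[str], str],
    retries_per_model: int = 2,
) -> str:
    """Try each model in order, retrying a few times before moving to the next."""
    last_error: Optional[Exception] = None
    for model in models:
        for _attempt in range(retries_per_model):
            try:
                return send(model)
            except Exception as exc:  # a real client would catch narrower error types
                last_error = exc
    # Every model failed on every attempt; surface the last error as the cause.
    raise RuntimeError("all models failed") from last_error


def flaky_send(model: str) -> str:
    """Hypothetical sender: the first provider is down, the second one answers."""
    if model == "provider-a/model":
        raise TimeoutError("simulated outage")
    return f"reply from {model}"


result = call_with_fallback(["provider-a/model", "provider-b/model"], flaky_send)
print(result)  # reply from provider-b/model
```

The ordering of `models` expresses preference: the cheapest or fastest provider goes first, and later entries are only contacted after the earlier ones have used up their attempts.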

What are the use cases of BerriAI-litellm?

- Chatbots: Build intelligent chatbots that can interact with users using natural language.

- Content Generation: Generate articles, stories, or any text-based content quickly and efficiently.

- Data Analysis: Use LLMs to analyze and summarize large datasets or documents.

- Educational Tools: Create applications that assist with learning and provide explanations on various topics.

How to use BerriAI-litellm?

- Install LiteLLM: Use `pip install litellm` to get started.

- Set Up Environment Variables: Configure your API keys for the services you want to use.

- Make API Calls: Use the provided functions to send messages and receive responses from the models.

- Explore Streaming: For real-time applications, enable streaming to get responses as they are generated.
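The steps above can be sketched roughly as follows. This assumes `litellm` is installed and that an OpenAI key is exported as `OPENAI_API_KEY`; the helper names (`build_messages`, `ask`, `ask_streaming`) and the default model name are illustrative choices, not part of the library. The `litellm` import is deferred into the functions so the module can be loaded even where the package is not installed.

```python
from typing import Dict, Iterator, List


def build_messages(prompt: str) -> List[Dict[str, str]]:
    """Wrap a user prompt in the OpenAI-style chat message format."""
    return [{"role": "user", "content": prompt}]


def ask(prompt: str, model: str = "gpt-3.5-turbo") -> str:
    """Send one prompt and return the full reply text."""
    from litellm import completion  # requires `pip install litellm`

    response = completion(model=model, messages=build_messages(prompt))
    # Responses follow the OpenAI shape, whichever provider served the call.
    return response.choices[0].message.content


def ask_streaming(prompt: str, model: str = "gpt-3.5-turbo") -> Iterator[str]:
    """Yield pieces of the reply as they are generated."""
    from litellm import completion  # requires `pip install litellm`

    stream = completion(model=model, messages=build_messages(prompt), stream=True)
    for chunk in stream:
        piece = chunk.choices[0].delta.content
        if piece:  # some chunks, such as the final one, carry no text
            yield piece
```

With the key set in the environment, `print(ask("Hello, how are you?"))` prints a complete reply, while `for piece in ask_streaming("Tell me a short joke."): print(piece, end="", flush=True)` prints the text incrementally. Switching providers should mostly be a matter of passing a different `model` string and setting that provider's key.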