What is Berri?

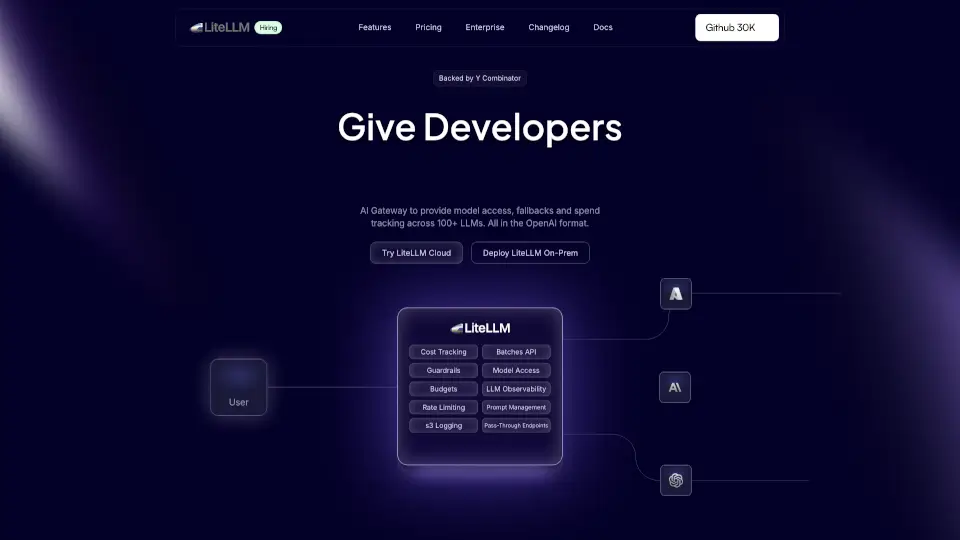

Berri (BerriAI) is the team behind LiteLLM, a tool that simplifies model access, spend tracking, and fallbacks across more than 100 LLMs. Built for developers, it is fully compatible with the OpenAI format and adds features such as load balancing and cost tracking.

What are the features of Berri?

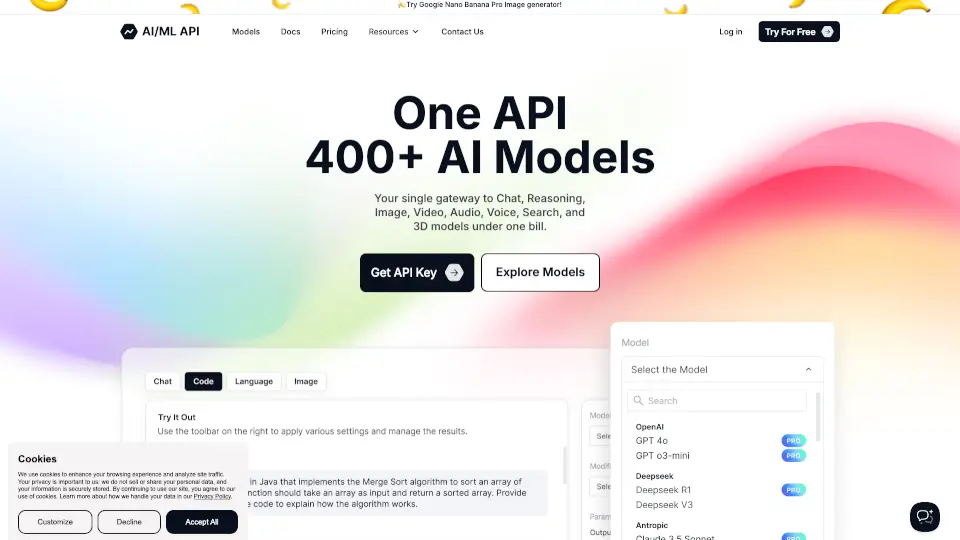

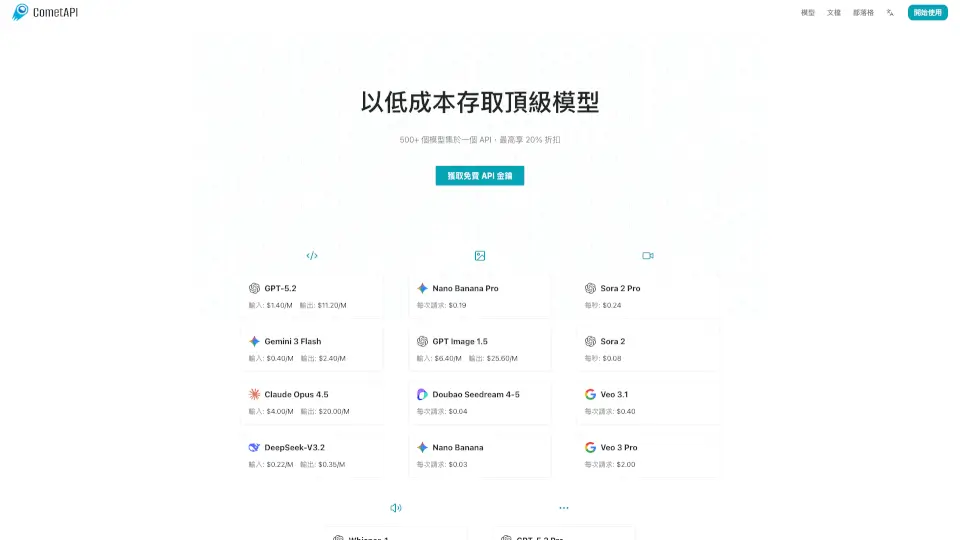

- Unified access: Reach more than 100 LLMs, including Azure, Gemini, Bedrock, and OpenAI, through a single interface.

- Spend tracking: Set and track budgets per model, key, or team.

- Load balancing: Spread requests across deployments with built-in load balancing.

- Self-serve portal: Let teams manage their own keys in production.

- OpenAI compatibility: Call any supported LLM through OpenAI-compatible endpoints.
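
To make the spend-tracking and load-balancing ideas above more concrete, here is a minimal conceptual sketch in plain Python. It is not LiteLLM's implementation or API: the `BudgetTracker` and `RoundRobinRouter` classes, the `team-a` key, and the deployment names are all hypothetical and only illustrate the general technique of per-key budgets combined with round-robin selection.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class BudgetTracker:
    """Tracks spend per key against a fixed budget (illustrative only)."""

    budgets: dict[str, float]
    spent: dict[str, float] = field(default_factory=dict)

    def record(self, key: str, cost: float) -> None:
        # Reject the charge if it would push the key past its budget.
        new_total = self.spent.get(key, 0.0) + cost
        if new_total > self.budgets[key]:
            raise RuntimeError(f"budget exceeded for {key}")
        self.spent[key] = new_total


class RoundRobinRouter:
    """Cycles through the deployments of a model in a fixed order."""

    def __init__(self, deployments: list[str]) -> None:
        self._cycle = cycle(deployments)

    def pick(self) -> str:
        return next(self._cycle)


# Hypothetical key and deployment names, chosen for illustration.
tracker = BudgetTracker(budgets={"team-a": 1.0})
router = RoundRobinRouter(["azure/gpt-4o", "openai/gpt-4o"])

picks = [router.pick() for _ in range(3)]  # alternates between the two deployments
tracker.record("team-a", 0.25)             # charge 0.25 against the team-a budget
```

A real gateway would persist spend in a database and weigh deployments by latency or rate limits; the sketch keeps everything in memory to show only the control flow.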

What are the use cases of Berri?

- Migrating projects to proxy endpoints with spend tracking.

- Centralized management of model access for development teams.

- Tracking performance and costs for AI-based projects.

How to use Berri?

- Install LiteLLM via Docker or the Python SDK.

- Configure virtual keys and budgets for your models.

- Use the OpenAI-compatible endpoints to access the LLMs.

- Track spend and performance through the self-serve portal.
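
As a rough illustration of the third step, the sketch below builds an OpenAI-format chat request aimed at a proxy, using only the Python standard library. The proxy address `http://localhost:4000`, the `/chat/completions` path, the virtual key `sk-example`, and the model name `gpt-4o` are assumptions for the example; substitute the values from your own deployment.

```python
import json
import urllib.request

PROXY_URL = "http://localhost:4000"  # assumed address of a locally running proxy
VIRTUAL_KEY = "sk-example"           # placeholder virtual key, not a real credential


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{PROXY_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {VIRTUAL_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("gpt-4o", "Hello")

# Sending the request requires a running proxy, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the request follows the OpenAI format, an existing OpenAI client can usually be pointed at the same address by changing only its base URL and API key.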