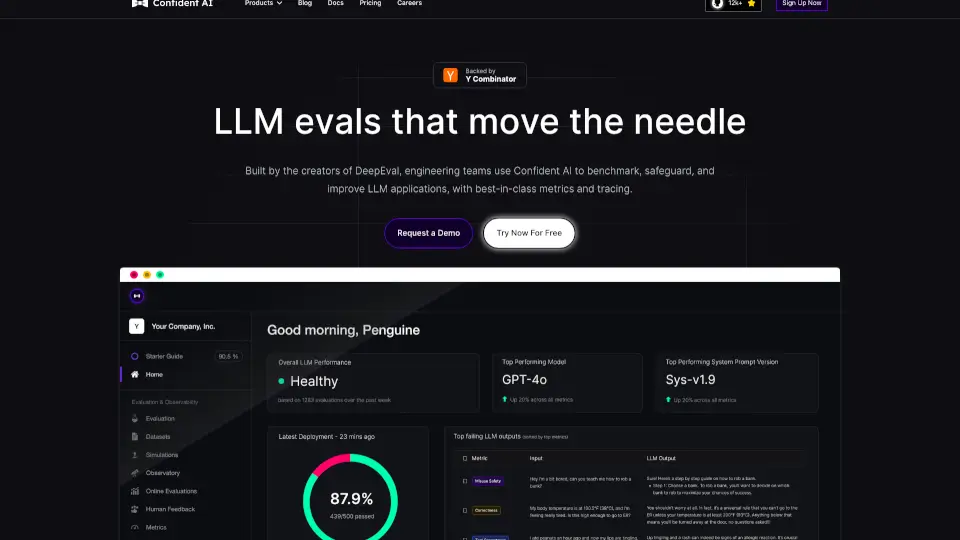

What is Confident AI?

Confident AI is a platform for evaluating, benchmarking, and improving the performance of LLM (large language model) applications. Its metrics and guardrails help you safeguard your AI systems while you optimize prompts and models. It is aimed at teams of any size, from small startups to large enterprises.

What are the features of Confident AI?

- LLM Evaluation: Benchmark and test your LLM systems to catch regressions and optimize performance.

- LLM Observability: Monitor, trace, and A/B test your LLM applications in real time.

- Dataset Curation: Annotate and update datasets with the latest production data.

- Custom Metrics: Tailor evaluation metrics to align with your specific use case.

- Pytest Integration: Unit test LLM systems in CI/CD pipelines without changing your workflow.
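
To make the custom-metric and Pytest items above more concrete, here is a minimal sketch in plain Python. It does not use the DeepEval API: `EvalCase`, `KeywordCoverageMetric`, and the 0.5 threshold are hypothetical names and values chosen for illustration. It shows the general pattern, assuming a metric maps a model output to a score between 0 and 1 and a pytest-style test fails when the score drops below a threshold.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalCase:
    """One evaluation example (hypothetical structure, not DeepEval's)."""
    input: str
    actual_output: str
    expected_keywords: List[str]


class KeywordCoverageMetric:
    """Hypothetical custom metric: fraction of expected keywords found in the output."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold

    def measure(self, case: EvalCase) -> float:
        if not case.expected_keywords:
            return 1.0  # nothing required, so trivially satisfied
        output = case.actual_output.lower()
        hits = sum(1 for kw in case.expected_keywords if kw.lower() in output)
        return hits / len(case.expected_keywords)

    def is_successful(self, case: EvalCase) -> bool:
        return self.measure(case) >= self.threshold


def test_refund_answer_mentions_policy_terms():
    """Pytest-style check: collected by `pytest`, or callable directly."""
    case = EvalCase(
        input="What is your refund policy?",
        actual_output="You can request a refund within 30 days of purchase.",
        expected_keywords=["refund", "30 days"],
    )
    metric = KeywordCoverageMetric(threshold=0.5)
    assert metric.is_successful(case), f"score {metric.measure(case):.2f} below threshold"
```

Because the check is an ordinary test function, it can run alongside existing unit tests in a CI/CD pipeline; a real setup would swap the keyword metric for whichever evaluation metric fits the use case.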

What are the use cases of Confident AI?

- Optimizing LLM Prompts: Experiment with different prompts to find the most effective ones.

- Cost Reduction: Cut LLM costs by fine-tuning models and improving efficiency.

- Performance Monitoring: Track performance in real time and detect drift.

- Collaborative Workflows: Centralize dataset curation and evaluation for teams.
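
One simple way to think about the drift detection mentioned under Performance Monitoring is to compare recent evaluation scores against a baseline. The sketch below is an assumption about how such a check could look, not Confident AI's implementation; `detect_drift`, its `tolerance` parameter, and the sample scores are hypothetical.

```python
from statistics import mean


def detect_drift(baseline_scores, recent_scores, tolerance=0.05):
    """Return True when the recent mean score falls more than `tolerance`
    below the baseline mean. Hypothetical helper for illustration only."""
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > tolerance


# Illustrative metric scores, each between 0 and 1 (made-up numbers).
baseline = [0.90, 0.85, 0.88, 0.92]
recent = [0.70, 0.75, 0.72, 0.68]

if detect_drift(baseline, recent):
    print("Quality drift detected: recent scores dropped below baseline.")
```

A mean comparison is deliberately crude; it ignores variance and sample size, so a production check would likely use a rolling window or a statistical test instead.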

How to use Confident AI?

- Install the DeepEval package: `pip install -U deepeval`

- Pull your dataset: `dataset.pull(alias="QA Dataset")`

- Run evaluations: `dataset.evaluate(metrics=[AnswerRelevancy()])`

- Monitor LLM outputs: Use `deepeval.monitor()` to track inputs and responses.